The Two Viral Strategies for Using AI to Make Shorts on YouTube (Format vs. Script)

Article Summary: This guide analyzes the two prevailing approaches to creating viral AI-generated YouTube Shorts: "Format-Driven Virality" and "Script-Driven Virality" (referred to below as Format-Driven Hits and Story-Driven Hits). It compares their creative mindset, production barrier, traffic acquisition mechanism, and commercial value, giving creators a clear roadmap to maximize views and monetization potential.

ℹ️ Please note: The trends, timelines, and RPM estimates discussed in this article are based on market analysis conducted in November 2025.

The landscape of AI-generated YouTube Shorts is currently dominated by two primary strategies: "Format-Driven Hits" and "Story-Driven Hits." These approaches differ significantly in their creative philosophy, production barriers, traffic acquisition mechanisms, and commercial value.

I. Format-Driven Hits: Capitalizing on Sensory Impact and Visual Formulas

Core Concept: The goal of a Format-Driven Hit is to capture audience attention within the critical first 1-2 seconds of a Short using visual spectacle, fast-paced editing, or formulaic elements. It relies on sensory impact to encourage subconscious repeat viewings, driving up completion rates and the crucial "Viewed vs. Swiped Away" metric.

Production & Risk: The barrier to entry is low, production costs are minimal, and feedback is rapid. However, the content's lifecycle is often short, and channels risk being penalized or removed by the platform for producing highly homogenized content.
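To make the "Viewed vs. Swiped Away" metric and repeat viewing concrete, here is a minimal Python sketch. The function names and the sample numbers are hypothetical and chosen only for illustration; the first function assumes the metric is the share of feed impressions where the viewer stayed rather than swiped.

```python
def viewed_rate(viewed: int, swiped_away: int) -> float:
    """Share of feed impressions where the viewer stayed instead of swiping."""
    shown = viewed + swiped_away
    if shown <= 0:
        raise ValueError("need at least one feed impression")
    return viewed / shown


def views_per_viewer(total_views: int, unique_viewers: int) -> float:
    """Average number of plays per unique viewer (repeat-viewing indicator)."""
    if unique_viewers <= 0:
        raise ValueError("need at least one unique viewer")
    return total_views / unique_viewers


if __name__ == "__main__":
    # Hypothetical numbers for a single Short, not real channel data.
    print(f"Viewed vs. swiped away: {viewed_rate(7_200, 2_800):.1%}")   # 72.0%
    print(f"Views per viewer: {views_per_viewer(15_000, 7_200):.2f}")   # 2.08
```

A looping or very short format pushes the second number above 1, which is the repeat-viewing effect that format-driven content relies on.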

| Trend Type | Popularity Timeline | Core Concept & IP/Topic | Production Difficulty & Risks | Traffic & Monetization Characteristics |
|---|---|---|---|---|
| AI Pets / 'Finger' Pets | Before Oct 2024 | Capitalized on early AI hype. Used AI-generated cats/dogs for simple dances or interactions. | Low difficulty, but trend is over and the niche is now highly saturated. | High initial traffic, but RPM is typically low as it's general entertainment content. |
| AI Baby Runway / Stories | Oct 2024 - Mar 2025 | Used AI to create baby characters for fashion shows or simple stories, focusing on cuteness and novelty. | Extremely High Risk. Easily violates YouTube's child safety policies, leading to YPP restrictions or channel termination. Strongly advise against this category. | High traffic potential, but ad placements are narrow due to the child-oriented audience, resulting in low YPP income. |
| AI Style Transfer (Image-to-Image / Transformation) | Oct 2024 - Ongoing | Uses editing tools like CapCut to create mesmerizing transitions between two different images (e.g., cartoon to realistic). Videos are often very short. | High production efficiency, but requires effort to differentiate. Relies on keyframe and mask editing skills. | Extremely high completion rates (avg 2+ views per viewer), high viral potential. Audience is heavily in India/Indonesia, leading to lower RPM. |
| AI 'Got Talent' Transformations | Nov 2024 - Apr 2025 | Exaggerated, impossible transformations or dance performances, leveraging the 'Got Talent' show format. | High Copyright Risk. Using original show footage (like judges' reactions) can lead to copyright strikes. Format has evolved to magic acts. | High viral rate; a classic strategy for rapid initial growth. |
| IP Element Fusion (K-pop, Jesus, Mythology) | May 2025 - Present | Integrates popular IP elements into format-driven content, e.g., K-pop idols in funny situations, miniature scenes with Jesus, or mythology. | Moderate difficulty. Success depends on accurately rendering the IP and skilled use of AI tools. Must avoid depictions that could alienate fanbases. | The IP brings its own audience, facilitating easy sharing. Offers limitless creative potential by combining elements (e.g., K-pop + ASMR). |
| ASMR Slicing / Miniature Worlds | May 2025 - Present | Leverages satisfying sensory elements (slicing, eating) or novel perspectives (a tiny person exploring a human organ or food). | Moderate difficulty. Requires high-quality visuals & sound. Miniature worlds have huge imaginative potential and can be differentiated. | Strong sensory triggers lead to high shareability. Can be expanded into educational or commentary content to increase value. |
| CCTV / New-Gen AI Video | Oct 2025 - Present | Utilizes realistic text-to-video AI tools (Sora, Veo) to create CCTV-style footage of animal attacks/rescues or vlogs. | Extremely low barrier to entry (prompt-dependent). A dividend from tool advancements. Disclosing AI use is crucial. | Massive traffic potential during this new-format honeymoon period. |
| Multi-Panel / Split-Screen / Looping | Evergreen (Elemental) | Uses layouts like four-panel grids, seamless loops, or split screens to increase visual density or trick users into repeat views. | Low editing difficulty; a common efficiency-boosting technique. If core content is weak, can be flagged as low-quality content. | A technical way to boost watch time, but must be paired with strong IP or other viral elements to sustain momentum. |

⚠️ According to YouTube's official Community Guidelines, content that is 'harmful or dangerous,' especially concerning minors, is strictly prohibited and can lead to immediate channel action.

Video Examples by Paradigm

AI Pets / 'Finger' Pets

AI 'Got Talent' Transformations & ASMR Slicing

II. Story-Driven Hits: Driven by Narrative Structure and Emotional Value

Core Concept: Story-Driven Hits focus on a complete narrative arc, conflict, and emotional engagement. The goal is to draw the viewer into a well-designed plot, using storytelling or information delivery to maximize watch time. While these videos are often longer with more complex scenes, they must establish a powerful hook within the first 1-3 seconds to prevent viewers from swiping away.

Production & Risk: The production barrier is higher, requiring a solid grasp of scriptwriting, storyboarding, and pacing. However, these formats have a longer lifespan and more sustainable revenue. Risks are primarily related to content policy violations (e.g., graphic or disturbing content) and homogenization.

| Trend Type | Popularity Timeline | Core Concept & IP/Topic | Production Difficulty & Risks | Traffic & Monetization Characteristics |
|---|---|---|---|---|
| Animal Stories / Rescues | Jan 2025 - Present | Classic narrative structure (distress + rescue + resolution), using animals to evoke empathy and emotional resonance. | Medium difficulty. Requires realistic visuals & color grading to reduce AI look. Niche rewards attention to detail. Avoid content showing animals in danger. | Huge, evergreen traffic. However, RPM is generally low, especially with audiences in India/Indonesia. |
| Abstract / Bizarre / Indian Folk Tales | Apr 2025 - Present | Simple, often bizarre plot logic with sudden twists or wish-fulfillment (e.g., revenge). Style is often exaggerated 3D or hyper-realistic. | High Risk. Very likely to trigger guideline violations for "shocking or disgusting content," leading to channel removal. | Extremely fast traffic explosion, potential for 100M+ views. However, RPM is extremely low (e.g., ~$0.01 for Indian audiences). |
| Mythology / K-pop Stories | May 2025 - Present | Uses popular IP (e.g., Chinese mythology, K-pop) to replicate or adapt viral live-action scripts. Themes: revenge, redemption, etc. | Advanced, competitive niche for high-level creators. Success lies in adapting the core script structure ("keeping the structure, changing the variables"). | IP provides built-in traffic. K-pop content yields a relatively higher RPM, often earning $200-$500 per 10M views. |
| Live-Action Replication (De-emphasizing AI) | Jun 2025 - Present | Finding viral scripts from TikTok and "re-skinning" them with AI visuals. Choose plot-driven videos, avoiding complex human actions. | Difficulty depends on ability to deconstruct & adapt the script. A key strategy to break past the 10k view ceiling & improve quality. | Replicating market-validated scripts increases hit probability. Commercial value depends on the new IP or language used. |
| Language-Specific AI Stories | Sep 2025 - Present | Story-driven content with voice-overs or subtitles in specific languages (English, Spanish, etc.). Scripts can be adapted from hits. | Workflow adds high-quality voice-overs (e.g., ElevenLabs) and multi-language translation, requiring high accuracy. | High RPM potential. Allows targeting of high-value geo audiences. An English story Short has a much higher RPM than non-verbal content. |
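The RPM figures quoted in the table are easier to compare once converted to the same unit. The short Python sketch below treats RPM as revenue in USD per 1,000 views and reworks two of the table's estimates; the function names are hypothetical, and the inputs are the article's own November 2025 estimates rather than measured data.

```python
def implied_rpm(revenue_usd: float, views: int) -> float:
    """RPM (revenue per 1,000 views) implied by total revenue and total views."""
    if views <= 0:
        raise ValueError("views must be positive")
    return revenue_usd / (views / 1000)


def estimated_revenue(views: int, rpm_usd: float) -> float:
    """Estimated revenue in USD for a given view count and RPM."""
    return (views / 1000) * rpm_usd


if __name__ == "__main__":
    # K-pop stories: "$200-$500 per 10M views" expressed as an RPM range.
    low = implied_rpm(200, 10_000_000)
    high = implied_rpm(500, 10_000_000)
    print(f"K-pop RPM range: ${low:.2f} to ${high:.2f}")          # $0.02 to $0.05

    # Bizarre folk tales: 100M views at an RPM of about $0.01.
    print(f"100M views at $0.01 RPM: ${estimated_revenue(100_000_000, 0.01):,.0f}")  # $1,000
```

Read this way, a 100M-view hit at a roughly $0.01 RPM earns about the same as 20M to 50M views of K-pop story content, which is why audience geography and language matter as much as raw view counts.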

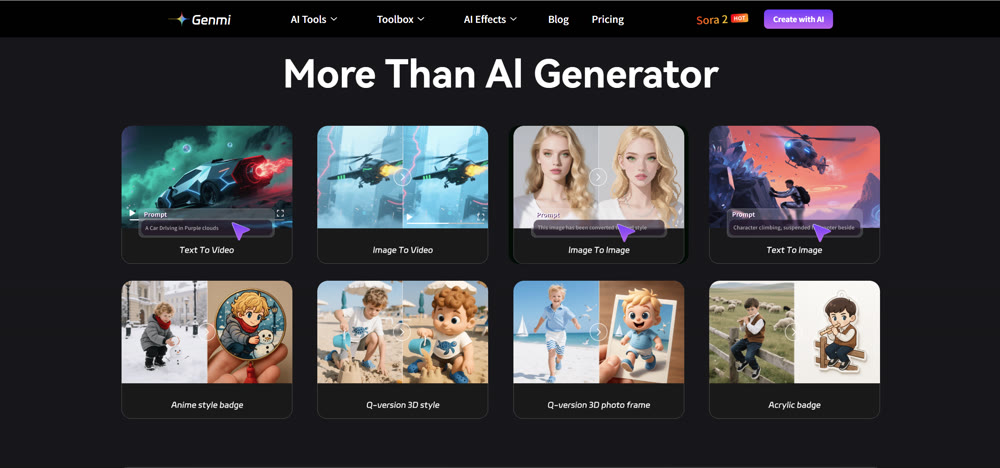

From Strategy to Execution with Genmi AI

Understanding these two paths—Format vs. Story—is the first step. The next is execution. While format-driven content offers a quick path to initial views, story-driven content builds a more sustainable channel with higher long-term value. This is where the true power of AI comes to life.

Creating compelling, story-driven shorts requires powerful and flexible tools. You need to move beyond simple visual tricks and start telling stories. With Genmi AI, you can transform detailed scripts into captivating scenes using advanced text-to-video generation, or bring static concepts to life by turning your key images into dynamic narratives with our image-to-video technology.

Stop chasing short-lived trends. Start building a library of high-quality, story-driven content that resonates with audiences and advertisers alike. Explore Genmi AI today and unlock the next generation of AI-powered storytelling.

The same assets can work on multiple platforms. Knowing how to monetize TikTok as well can add a second revenue stream from content you have already produced.
